A coupling inversion tutorial¶

[1]:

# Import externals

import numpy as np

import copy

import os

# Colormaps

from cmcrameri import cm

# Import personal libraries

import csi.TriangularPatches as triangleFault

import csi.fault3D as rectFault

import csi.transformation as transformation

import csi.geodeticplot as geoplt

import csi.gps as onegps

import csi.insar as insar

import csi.multifaultsolve as multiflt

Some informations required¶

[2]:

# Here we just provide some elements needed for the code to run

# Origin of the local coordinate system

lon0 = -70.

lat0 = -20.

# Geometry

faultGeometry = os.path.join(os.getcwd(), 'DataAndModels/InversionCoupling/megathrust.gocad')

edgeGeometry = os.path.join(os.getcwd(), 'DataAndModels/InversionCoupling/edgefault.txt')

# Data GNSS

gnssData = os.path.join(os.getcwd(), 'DataAndModels/InversionCoupling/Northern.enu')

sarData = {'filename': os.path.join(os.getcwd(), 'DataAndModels/InversionCoupling/insar'),

'sigma': 0.316663320126,

'lambda':6.94320681868}

# Convergence from Metois et al 2012

# 67 mm/yr, azimuth 78deg

# minus the sliver motion --> 56 mm/yr

convergence = [78., 56.]

Building the fault objects¶

[3]:

# We first initialize a fault with triangular patches

fault = triangleFault('Megathrust', lon0=lon0, lat0=lat0)

# We read the triangular patches from the output format of the Gocad software.

# There is other reading methods

fault.readGocadPatches(faultGeometry, utm=False,factor_depth=1.)

# We initialize the slip on the fault with zeros

fault.initializeslip(values='depth')

# We build the trace of the fault. The shallowest patches are

fault.setTrace(delta_depth=3)

fault.trace2ll()

# For further plotting

fault.linewidth=2

fault.color='r'

---------------------------------

---------------------------------

Initializing fault Megathrust

[4]:

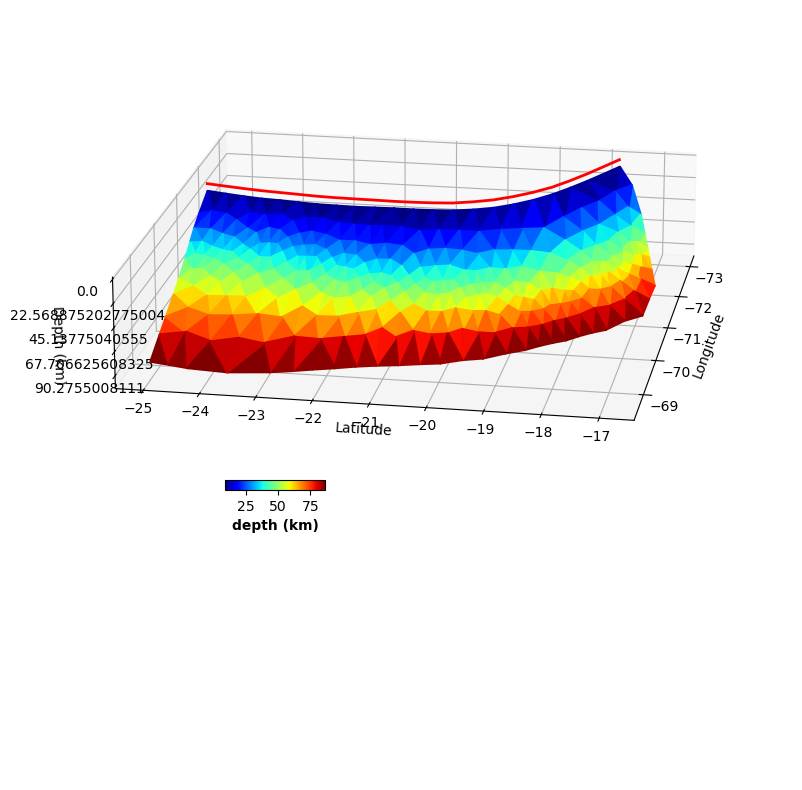

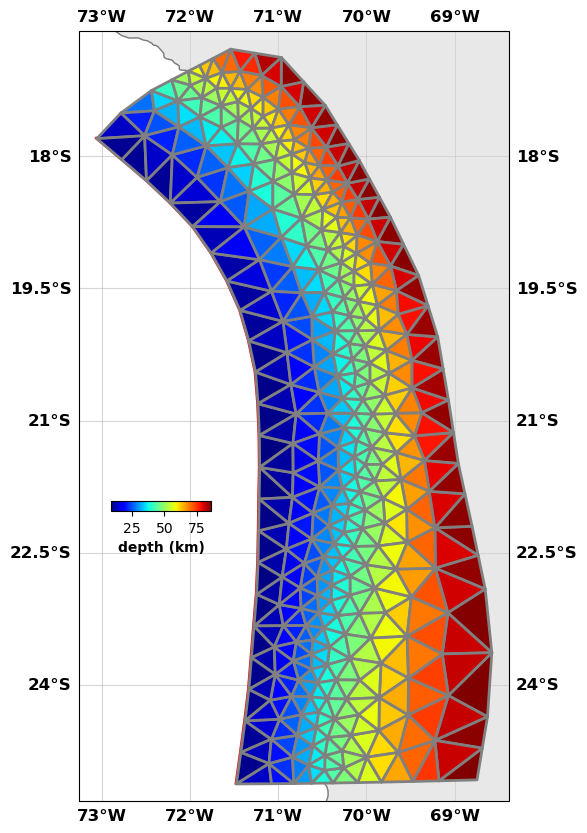

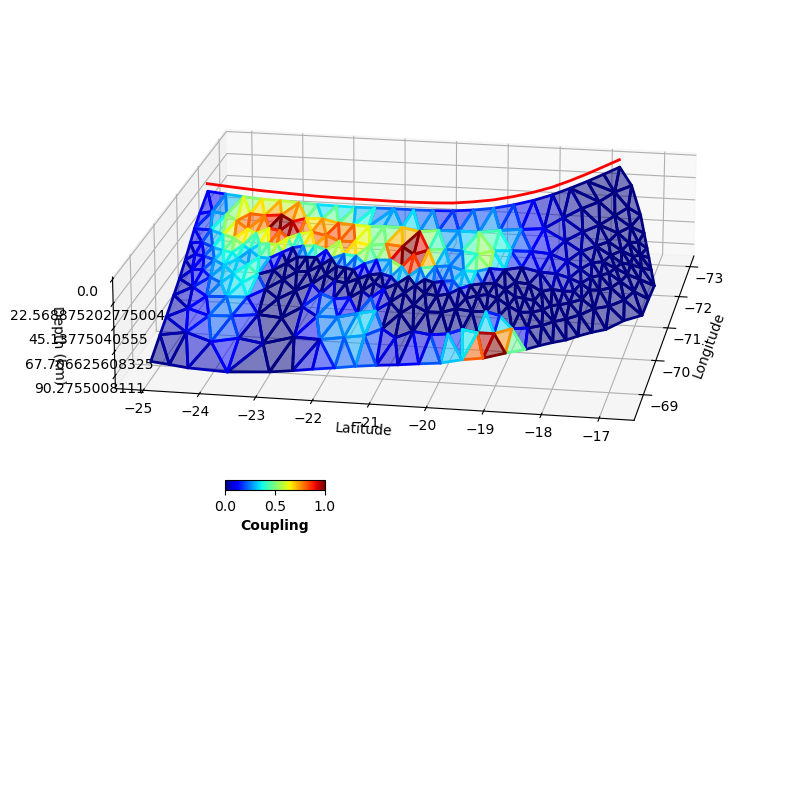

fault.plot(figsize=((10,10),(10,10)), view={'elevation': 20, 'azimuth': 10, 'shape': (1, 1, 0.3)}, cbaxis=[0.33, 0.4, 0.1, 0.01], cblabel='depth (km)')

[5]:

# The coupling problem has a lot of edge effects so we add some elements on the side to absorb them

edge = rectFault('Edges', lon0=lon0, lat0=lat0)

# This is a method reading from a text file

edge.readPatchesFromFile(edgeGeometry, readpatchindex=False, donotreadslip=True)

---------------------------------

---------------------------------

Initializing fault Edges

Building the data objects¶

[6]:

# Create the gnss object

gnss = onegps('GNSS data', lon0=lon0, lat0=lat0)

# Read the data

gnss.read_from_enu(gnssData, factor=1., header=1, checkNaNs=False)

# We don't want to use the vertical component

gnss.vel_enu[:,2] = np.nan

# We build a covariance matrix for the east and north components

gnss.buildCd(direction='en')

---------------------------------

---------------------------------

Initialize GPS array GNSS data

Read data from file /Users/romainjolivet/MYBIN/csi/notebooks/DataAndModels/InversionCoupling/Northern.enu into data set GNSS data

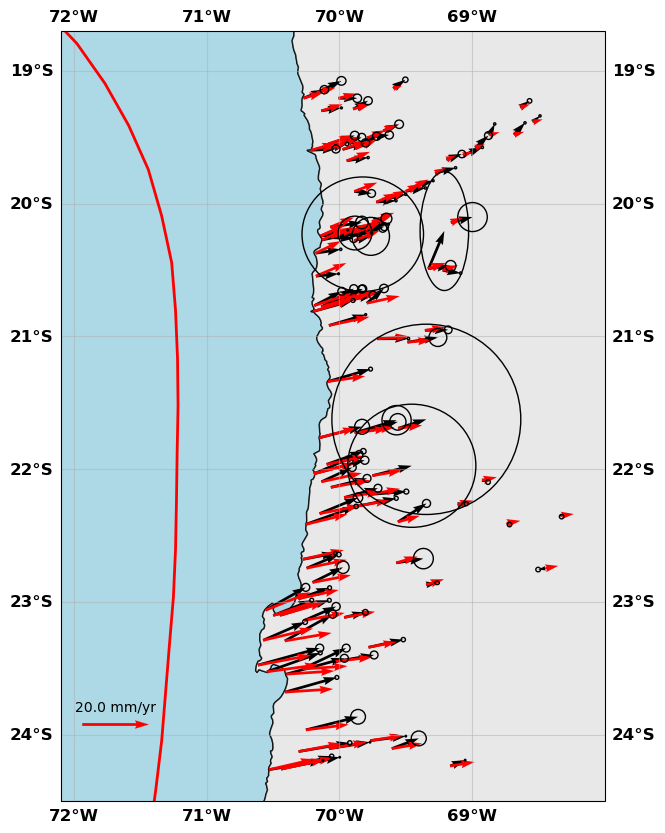

[7]:

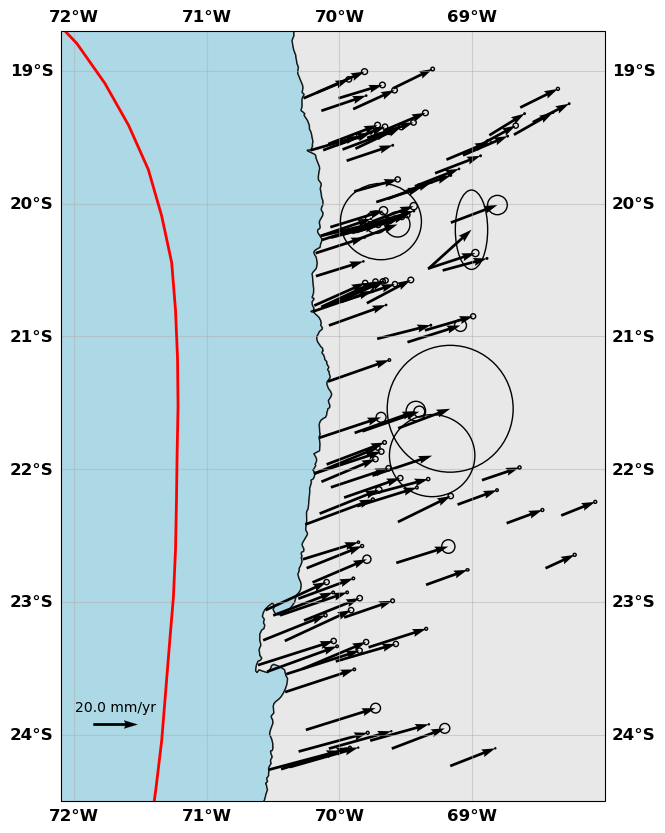

# Show me

gnss.plot(faults=fault, figsize=(10,10), seacolor='lightblue', title=None, box=[-72.1, -68., -24.5, -18.7], legendscale=20., legendunit='mm/yr', scale=60)

[8]:

# Create an insar object.

sar = insar('InSAR data', lon0=lon0, lat0=lat0)

# read the data from the downsampled file. We have downsampled the full res InSAR velocity map

# See the InSAR tutorial to see how that works

sar.read_from_varres(sarData['filename'], factor=1.0)

# We build a data covaraince matrix using the information from the covariance calcualtion

# See the InSAR tutorial to see how that works

sar.buildCd(sarData['sigma'], sarData['lambda'])

---------------------------------

---------------------------------

Initialize InSAR data set InSAR data

Read from file /Users/romainjolivet/MYBIN/csi/notebooks/DataAndModels/InversionCoupling/insar into data set InSAR data

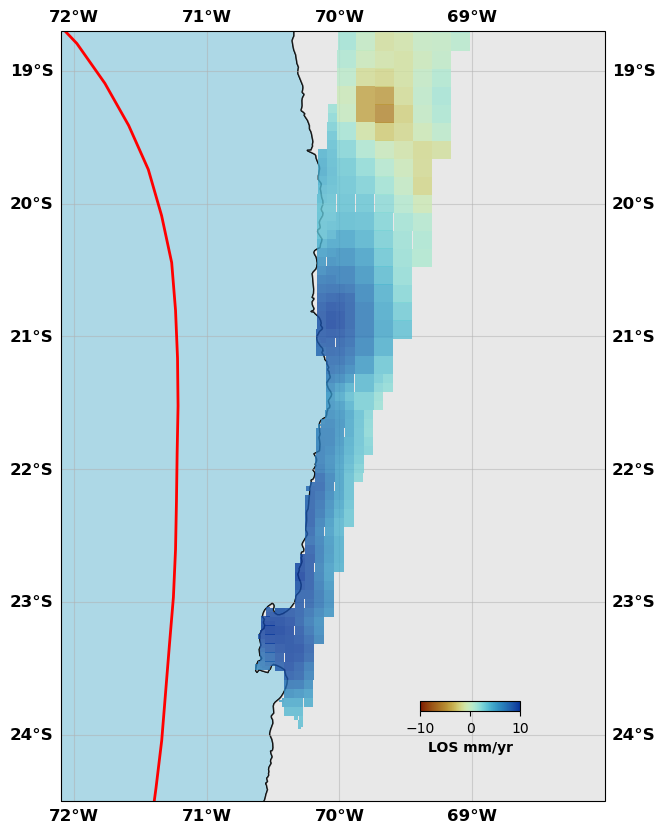

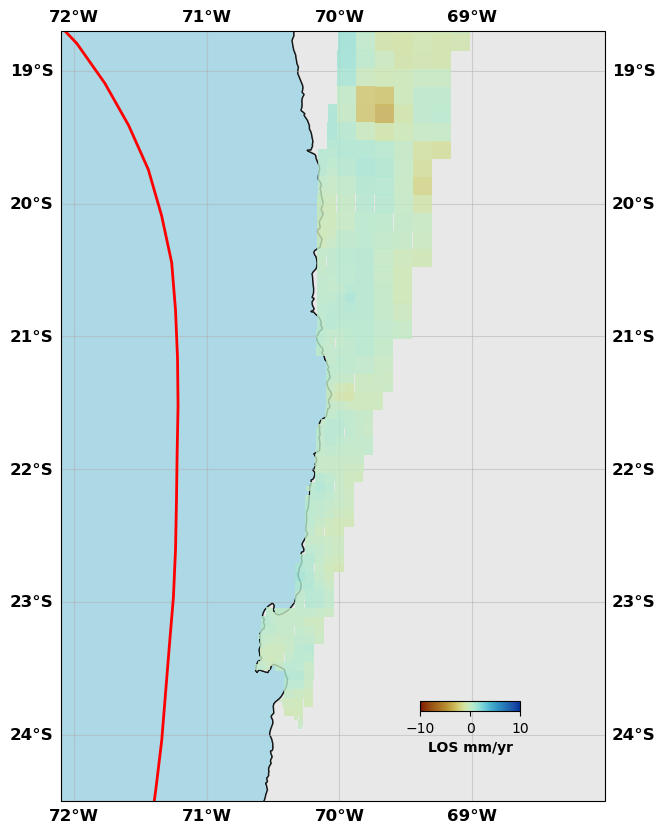

[9]:

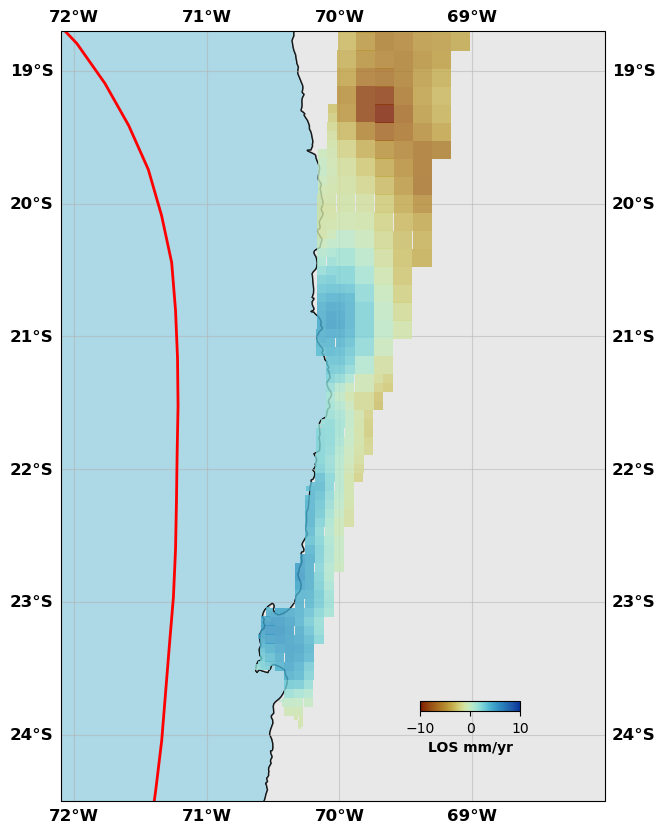

# Show me

sar.plot(plotType='decimate', faults=fault, box=[-72.1, -68., -24.5, -18.7], edgewidth=0, figsize=(10,10), cmap=cm.roma, norm=(-10,10),

colorbar=True, cbaxis=[0.6, 0.2, 0.1, 0.01], cblabel='LOS mm/yr', seacolor='lightblue', alpha=0.8)

Create a transformation object¶

[10]:

# Initialize transformation object

transform = transformation('All transforms', lon0=lon0, lat0=lat0)

---------------------------------

---------------------------------

Initializing transformation All transforms

Build the Green’s functions¶

[11]:

# Build the GFs for the transformation part

transform.buildGFs([gnss, sar], ['translationrotation', 1])

[12]:

# The slip direction is 'c' in reference for coupling.

# Convergence is here a tuple of direction and convergence rate. it can be an array the size of the numnber of patches with 2 columns

fault.buildGFs(gnss, method='meade', vertical=False, slipdir='c', convergence=convergence)

fault.buildGFs(sar, method='meade', vertical=True, slipdir='c', convergence=convergence)

edge.buildGFs(gnss, method='okada', vertical=False, slipdir='c', convergence=convergence)

edge.buildGFs(sar, method='okada', vertical=True, slipdir='c', convergence=convergence)

Greens functions computation method: meade

---------------------------------

---------------------------------

Building Green's functions for the data set

GNSS data of type gps in a homogeneous half-space

Patch: 556 / 556

Greens functions computation method: meade

---------------------------------

---------------------------------

Building Green's functions for the data set

InSAR data of type insar in a homogeneous half-space

Patch: 556 / 556

Greens functions computation method: okada

---------------------------------

---------------------------------

Building Green's functions for the data set

GNSS data of type gps in a homogeneous half-space

Patch: 8 / 8

Greens functions computation method: okada

---------------------------------

---------------------------------

Building Green's functions for the data set

InSAR data of type insar in a homogeneous half-space

Patch: 8 / 8

Solver¶

Assembling the problem¶

[13]:

# We need now to assemble the problem

# Green's functions fault

edge.assembleGFs([gnss, sar], slipdir='c')

fault.assembleGFs([gnss, sar], slipdir='c')

transform.assembleGFs([gnss, sar])

# Data vectors

edge.assembled([gnss, sar])

fault.assembled([gnss, sar])

transform.assembled([gnss, sar])

# Data covariance matr

edge.assembleCd([gnss, sar])

fault.assembleCd([gnss, sar])

transform.assembleCd([gnss, sar])

---------------------------------

---------------------------------

Assembling G for fault Edges

Dealing with GNSS data of type gps

Dealing with InSAR data of type insar

---------------------------------

---------------------------------

Assembling G for fault Megathrust

Dealing with GNSS data of type gps

Dealing with InSAR data of type insar

---------------------------------

---------------------------------

Assembling G for transformation All transforms

---------------------------------

---------------------------------

Assembling d vector

Dealing with data GNSS data

Dealing with data InSAR data

---------------------------------

---------------------------------

Assembling d vector

Dealing with data GNSS data

Dealing with data InSAR data

---------------------------------

---------------------------------

Assembling d vector

Dealing with data GNSS data

Dealing with data InSAR data

Regularization¶

There is no fancy regularization implemented yet in CSI since I do all my slip inversions with AlTar (which is designed to avoid smoothing and other dampings). Here, we only have the approach by Radiguet et al 2010.

[14]:

# Build a model covariance

# For the fault, we use a 10 mm sigma and a 20 km smoothing distance

fault.buildCm(1., 20.)

# For the edges, we use a 10 mm sigma and a 40 km smoothing distance

edge.buildCm(1., 40.)

# For transofmr, we use a Gaussian prior with a 100 variance (large)

transform.buildCm(100.)

---------------------------------

---------------------------------

Assembling the Cm matrix

Sigma = 1.0

Lambda = 20.0

Lambda0 = 1.7946601468315058

---------------------------------

---------------------------------

Assembling the Cm matrix

Sigma = 1.0

Lambda = 40.0

Lambda0 = 159.1631677112316

Solver creation¶

[15]:

# Create a solver object that will assemble the full problem

slv = multiflt('Coupling Northern Chile', [edge, fault, transform])

slv.assembleGFs()

slv.assembleCm()

---------------------------------

---------------------------------

Initializing solver object

Not a fault detected

Number of data: 635

Number of parameters: 568

Parameter Description ----------------------------------

-----------------

Fault Name ||Strike Slip ||Dip Slip ||Tensile ||Coupling ||Extra Parms

Edges ||None ||None ||None || 0 - 8 ||None

-----------------

Fault Name ||Strike Slip ||Dip Slip ||Tensile ||Coupling ||Extra Parms

Megathrust ||None ||None ||None || 8 - 564 ||None

-----------------

Fault Name ||Strike Slip ||Dip Slip ||Tensile ||Coupling ||Extra Parms

All transforms ||None ||None ||None ||None || 564 - 568

Problem solving¶

Here, we first solve the Generalized least squares problem first to get an good a priori. Then, we use ConstrainedLeastSquares to solve the problem for coupling being between 0 and 1. It takes a bit of time because we have a lot of parameters.

[16]:

# Solve the Generalized least square problem for a priori

slv.GeneralizedLeastSquareSoln()

# Create a list of tuples for the bounds

bounds = []

for i in range(edge.N_slip):

bounds.append([0.0, 1.0])

for i in range(fault.N_slip):

bounds.append([0.0, 1.0])

for i in range(transform.TransformationParameters):

bounds.append([-1000., 1000.])

# Get the prior model

mprior = slv.mpost.copy()

# Solve

slv.ConstrainedLeastSquareSoln(bounds=bounds,

iterations=2000,

method='L-BFGS-B',

mprior=mprior,

tolerance=1e-07,

maxfun=1e10,

checkIter=True)

---------------------------------

---------------------------------

Computing the Generalized Inverse

Computing the inverse of the model covariance

Computing the inverse of the data covariance

Computing m_post

Magnitude is

8.897480779921995

---------------------------------

---------------------------------

Computing the Constrained least squares solution

Final data space size: 635

Final model space size: 568

Computing the inverse of the model covariance

Computing the inverse of the data covariance

Performing constrained minimzation

RUNNING THE L-BFGS-B CODE

* * *

Machine precision = 2.220D-16

N = 568 M = 10

At X0 204 variables are exactly at the bounds

At iterate 0 f= 4.23787D+03 |proj g|= 9.51858D+02

At iterate 1 f= 3.86096D+03 |proj g|= 1.05903D+02

At iterate 2 f= 3.25515D+03 |proj g|= 1.26229D+02

At iterate 3 f= 2.23039D+03 |proj g|= 1.99656D+02

At iterate 4 f= 2.07175D+03 |proj g|= 2.12526D+02

At iterate 5 f= 1.82016D+03 |proj g|= 2.05972D+02

At iterate 6 f= 1.63063D+03 |proj g|= 1.67115D+02

At iterate 7 f= 1.43155D+03 |proj g|= 8.32178D+01

At iterate 8 f= 1.34318D+03 |proj g|= 1.75499D+01

At iterate 9 f= 1.28240D+03 |proj g|= 1.39552D+01

At iterate 10 f= 1.25600D+03 |proj g|= 1.36201D+01

At iterate 11 f= 1.23669D+03 |proj g|= 1.20065D+01

At iterate 12 f= 1.21398D+03 |proj g|= 9.46010D+01

At iterate 13 f= 1.20727D+03 |proj g|= 1.38180D+01

At iterate 14 f= 1.20490D+03 |proj g|= 6.77287D+00

At iterate 15 f= 1.20236D+03 |proj g|= 6.37444D+00

At iterate 16 f= 1.19943D+03 |proj g|= 5.97104D+00

At iterate 17 f= 1.19607D+03 |proj g|= 5.27748D+00

At iterate 18 f= 1.19518D+03 |proj g|= 4.52760D+00

At iterate 19 f= 1.19429D+03 |proj g|= 3.95657D+00

At iterate 20 f= 1.19373D+03 |proj g|= 4.27344D+00

At iterate 21 f= 1.19311D+03 |proj g|= 4.55391D+00

At iterate 22 f= 1.19252D+03 |proj g|= 4.79954D+00

At iterate 23 f= 1.19202D+03 |proj g|= 4.62901D+00

At iterate 24 f= 1.19172D+03 |proj g|= 4.33899D+00

At iterate 25 f= 1.19138D+03 |proj g|= 1.69178D+01

At iterate 26 f= 1.19102D+03 |proj g|= 3.30492D+00

At iterate 27 f= 1.19074D+03 |proj g|= 3.19851D+00

At iterate 28 f= 1.18996D+03 |proj g|= 2.84733D+00

At iterate 29 f= 1.18975D+03 |proj g|= 5.87111D+00

At iterate 30 f= 1.18939D+03 |proj g|= 3.80785D+00

At iterate 31 f= 1.18907D+03 |proj g|= 2.61530D+00

At iterate 32 f= 1.18887D+03 |proj g|= 3.45906D+00

At iterate 33 f= 1.18846D+03 |proj g|= 5.16570D+00

At iterate 34 f= 1.18809D+03 |proj g|= 3.05463D+00

At iterate 35 f= 1.18783D+03 |proj g|= 2.87141D+00

At iterate 36 f= 1.18770D+03 |proj g|= 1.09405D+00

At iterate 37 f= 1.18760D+03 |proj g|= 6.22013D+00

At iterate 38 f= 1.18755D+03 |proj g|= 8.96514D+00

At iterate 39 f= 1.18750D+03 |proj g|= 3.02007D+00

At iterate 40 f= 1.18747D+03 |proj g|= 1.41126D+00

At iterate 41 f= 1.18742D+03 |proj g|= 2.35793D+00

At iterate 42 f= 1.18738D+03 |proj g|= 2.33347D+00

At iterate 43 f= 1.18733D+03 |proj g|= 6.49950D-01

At iterate 44 f= 1.18730D+03 |proj g|= 6.47515D-01

At iterate 45 f= 1.18729D+03 |proj g|= 5.90285D-01

At iterate 46 f= 1.18728D+03 |proj g|= 9.97420D-01

At iterate 47 f= 1.18727D+03 |proj g|= 5.12637D-01

At iterate 48 f= 1.18727D+03 |proj g|= 5.00268D-01

At iterate 49 f= 1.18727D+03 |proj g|= 8.80232D-01

At iterate 50 f= 1.18726D+03 |proj g|= 2.49429D-01

At iterate 51 f= 1.18726D+03 |proj g|= 4.10523D-01

At iterate 52 f= 1.18726D+03 |proj g|= 5.27280D-01

At iterate 53 f= 1.18726D+03 |proj g|= 7.81142D-01

At iterate 54 f= 1.18726D+03 |proj g|= 1.21804D-01

At iterate 55 f= 1.18726D+03 |proj g|= 1.11140D-01

At iterate 56 f= 1.18726D+03 |proj g|= 1.03091D-01

At iterate 57 f= 1.18726D+03 |proj g|= 8.99945D-02

At iterate 58 f= 1.18726D+03 |proj g|= 1.04865D-01

At iterate 59 f= 1.18726D+03 |proj g|= 8.24684D-02

At iterate 60 f= 1.18726D+03 |proj g|= 7.11680D-02

* * *

Tit = total number of iterations

Tnf = total number of function evaluations

Tnint = total number of segments explored during Cauchy searches

Skip = number of BFGS updates skipped

Nact = number of active bounds at final generalized Cauchy point

Projg = norm of the final projected gradient

F = final function value

* * *

N Tit Tnf Tnint Skip Nact Projg F

568 60 66 578 0 179 7.117D-02 1.187D+03

F = 1187.2589567979362

CONVERGENCE: REL_REDUCTION_OF_F_<=_FACTR*EPSMCH

[17]:

# Distribute

slv.distributem(verbose=True)

# Remove the transformation

transform.removePredictions([gnss, sar])

# Compute Synthetics

for data in [gnss, sar]: data.buildsynth(slv.faults, direction='c')

---------------------------------

---------------------------------

Distribute the slip values to fault Edges

---------------------------------

---------------------------------

Distribute the slip values to fault Megathrust

---------------------------------

---------------------------------

Distribute the slip values to fault All transforms

Show me the resutls¶

[18]:

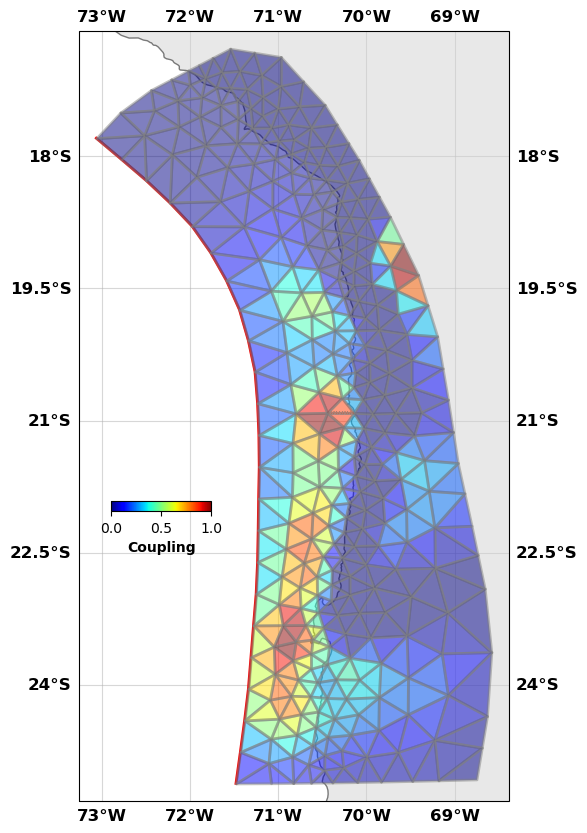

fault.plot(slip='c',

figsize=((10,10),(10,10)), alpha=0.5,

view={'elevation': 20, 'azimuth': 10, 'shape': (1, 1, 0.3)},

cbaxis=[0.33, 0.4, 0.1, 0.01], cblabel='Coupling')

[19]:

# Show me the InSAR data

sar.plot(plotType='decimate', data='data', faults=fault, box=[-72.1, -68., -24.5, -18.7], edgewidth=0, figsize=(10,10), cmap=cm.roma, norm=(-10,10),

colorbar=True, cbaxis=[0.6, 0.2, 0.1, 0.01], cblabel='LOS mm/yr', seacolor='lightblue', alpha=0.8)

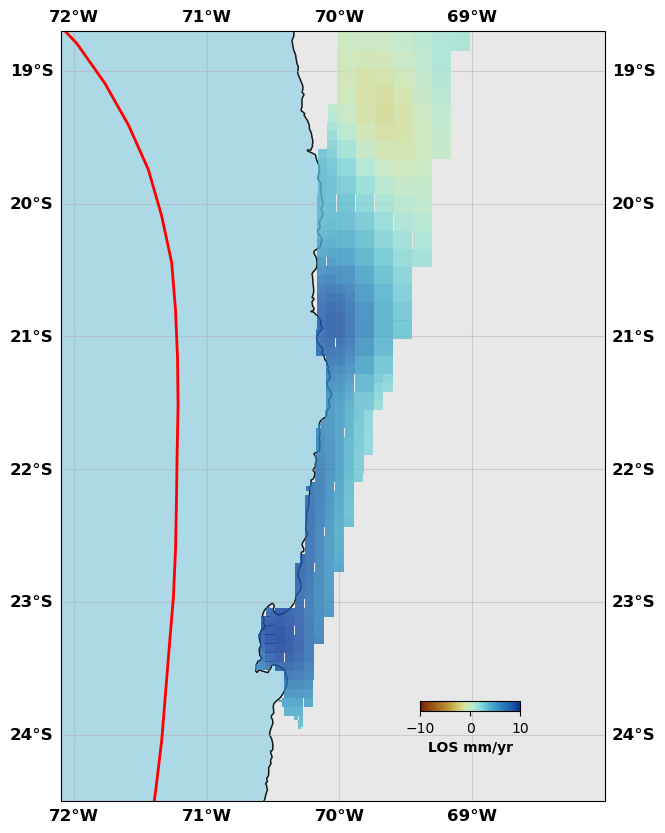

sar.plot(plotType='decimate', data='synth', faults=fault, box=[-72.1, -68., -24.5, -18.7], edgewidth=0, figsize=(10,10), cmap=cm.roma, norm=(-10,10),

colorbar=True, cbaxis=[0.6, 0.2, 0.1, 0.01], cblabel='LOS mm/yr', seacolor='lightblue', alpha=0.8)

sar.plot(plotType='decimate', data='res', faults=fault, box=[-72.1, -68., -24.5, -18.7], edgewidth=0, figsize=(10,10), cmap=cm.roma, norm=(-10,10),

colorbar=True, cbaxis=[0.6, 0.2, 0.1, 0.01], cblabel='LOS mm/yr', seacolor='lightblue', alpha=0.8)

[20]:

gnss.plot(data=['data', 'synth'], color=['k', 'r'],

faults=fault, figsize=(10,10),

seacolor='lightblue', title=None,

box=[-72.1, -68., -24.5, -18.7],

legendscale=20., legendunit='mm/yr', scale=40)

That’s all folks! Lots of tuning can be done, including better smoothing, better Green’s functions, better internal strain estimation. All of this is possible within CSI and the sky is the limit since you can implement what you want in CSI!